AI is transforming global banking and financial services, creating new commercial opportunities and new criminal risks, and prompting governments to reconsider their positions on supervision and legislation. Regulators' attitudes reflect that shift. Some supervisory bodies are leading with overarching AI compliance frameworks, while others are taking a principles-based approach. Still others are holding off entirely, waiting for more data and insight to shape their response.

AI Regulations: Compliance Challenges

The pace of AI innovation and the diversity of regulatory perspectives have made many firms reluctant to build the technology into their existing compliance infrastructure. Aware of that hesitancy, governments and supervisory bodies are developing their technical expertise so that they can make informed decisions and, ultimately, implement better AI laws.

With that in mind, many regulators have signalled that they understand AI's potential to enhance anti-financial crime (AFC) efforts, including powerful new capabilities to detect and prevent damaging activities such as money laundering and the financing of terrorism. The UK's Financial Conduct Authority (FCA), for example, has stated that it is ready to "make the UK the global hub of AI innovation", while the government of Singapore launched an updated National AI Strategy (NAIS 2.0) in December 2023, stating that it wants the city-state to be "a place where AI is used to uplift and empower" people and businesses.

Regulators' efforts to nurture AI innovation demonstrate a broad acceptance of the technology's potential to enhance compliance. Those efforts also mean that the regulatory landscape will continue to evolve rapidly, and that firms should be ready to adapt to changing rules.

Global Perspectives

Let’s take a look at the current AI perspectives of key global regulators.

The Financial Action Task Force

As an inter-governmental body, the Financial Action Task Force (FATF) makes regulatory recommendations that governments must implement domestically. The FATF’s most recent guidance on AI regulation is set out in its 2021 publication Opportunities and Challenges of New Technologies for AML/CFT.

In that document, the FATF explores the potential for AI to enhance implementation of its anti-money laundering (AML) and counter-financing of terrorism (CFT) standards, not least by making firms’ compliance efforts “faster, cheaper, and more effective”. The report focuses on the technical compliance possibilities of subsets of AI, such as machine learning and natural language processing, that can help firms screen customers against vast data sets, recognise patterns that human compliance teams might miss, make predictions and recommendations, and facilitate stronger decision-making.

While emphasising the potential for positive change, the FATF has also cautioned that AI compliance innovations must offer sufficient explainability and transparency. Those factors are critical in investigative contexts given the need for data to be scrutinised and verified by regulators, authorities and auditors.

The European Union

The EU has been relatively proactive in its approach to AI regulation, opting to develop an overarching legal framework as early as 2021. On 9 December 2023, negotiators for the European Parliament and the Council reached a provisional deal on the AI Act, which was characterised as a landmark bill and the first of its kind in the world. The Council adopted the AI Act on 21 May 2024, and the regulation entered into force across the EU on 1 August 2024.

The Act will serve as an industry-agnostic, risk-based framework that will pave the way for the EU to shape the use of AI and address its risks. The EU’s stated goal is to ensure that use of AI is “safe, transparent, traceable, non-discriminatory and environmentally friendly,” and that it continues to be “overseen by people”, rather than automation.

Under the EU's new regime, AI systems are classified by risk level, from prohibited "unacceptable risk" practices to minimal-risk systems, and compliance requirements are applied in proportion to that risk, with national authorities supervising compliance. The EU has set out a phased implementation timeline for the AI Act, with some provisions not applying until 2030. The first obligations to take effect, from 2 February 2025, are the bans on prohibited AI practices and the AI literacy requirements.

The United Kingdom

The Financial Conduct Authority has characterised itself as “technology-agnostic”, pointing out that it does not regulate technology, but the use of technology and technology’s impact on financial services. The regulator has expressed a commitment to innovation in its approach to AI in compliance contexts, and revealed that it is already using AI tools to detect certain criminal activities. The FCA has invested in the development of AI compliance technology through horizon scanning, synthetic data capabilities, and a “first of its kind” digital sandbox in which firms can test their innovations safely.

It is unclear whether the UK will follow the EU's regulatory approach, but the government has indicated that it will seek to apply principles-based AI regulation and prioritise international harmonisation. In 2021, the UK government published its National AI Strategy, which included details of a proposed regulatory framework that would be "proportionate, light-touch, and forward-looking". In March 2023, the government published a whitepaper setting out its "pro-innovation approach" to AI regulation, and in February 2024 it published its response to the consultation on that whitepaper, offering further detail.

In April 2024, the UK government began the early-stage discussion of an AI Regulation Bill. The Bill was ultimately paused following the dissolution of the UK parliament for the 2024 general election.

The United States

There is currently no comprehensive federal AI legislation in the US, but the Financial Crimes Enforcement Network (FinCEN) has recognised the potential of the technology to enhance anti-money laundering and counter-financing of terrorism strategies while reducing the cost of compliance. State-level AI rules vary across the US, with many state governments enacting, or proposing to enact, transparency-focused requirements to prevent fraud and protect intellectual property rights.

While the US has not matched the regulatory pace of other governments on AI, there has been federal activity. The Algorithmic Accountability Act was reintroduced in Congress in 2022, although it has not been enacted, and in 2023 President Biden signed an Executive Order on Safe, Secure and Trustworthy AI. The proposed Act would require companies to assess the impact of automated decision systems in order to ensure transparency and fairness, while the Executive Order requires developers of the most powerful AI systems to share certain information, such as safety test results, with the government.

In 2022, the Biden administration published a Blueprint for an AI Bill of Rights. The document sets out a non-binding set of principles that firms may use to govern the "design, use, and deployment" of AI systems in a manner that aligns with the rights of American citizens.

Cutting-Edge AI Screening

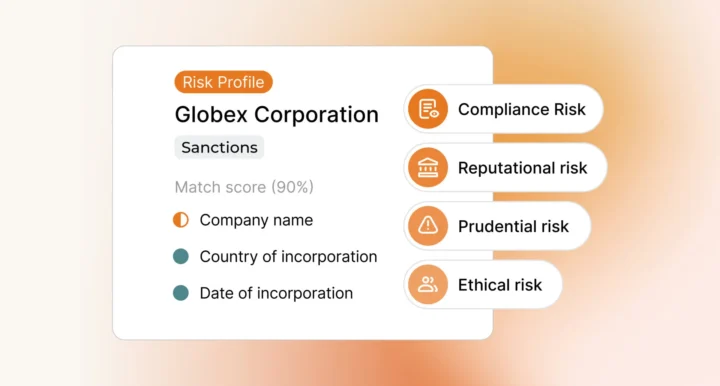

Ripjar's Labyrinth Screening platform helps firms stay at the cutting edge of AI in compliance, with proven global screening tools built on decades of regulatory expertise. Labyrinth's AI-powered screening lets users extract risk-relevant data points from millions of unstructured sources, build in-depth customer profiles in seconds, and use generative AI to create concise prose summaries of each profile to speed up compliance decision-making.

Learn more about Ripjar’s proven, transparent AI screening solutions for AML Compliance

Last updated: 6 January 2025