On 15 February 2024, Ripjar hosted its New York Summit, a luxury, invite-only breakfast event in the heart of Manhattan. The New York Summit brought together senior compliance professionals from around North America and the world to network, share knowledge, and explore the future of compliance.

Ripjar Chief Product Officer Gabriel Hopkins hosted the New York Summit, moderating an expert panel discussion on the challenges and opportunities of AI technology in compliance. The panel included Accenture Financial Crime Lead Blair West, Dow Jones Risk & Compliance Director of SaaS Products Kevin Wolf, Amazon Web Services Financial Services Specialist Alvin Huang, and Ripjar CEO Jeremy Annis.

Let’s take a look at some of the panel’s key highlights:

Panel Discussion

What worries customers most about compliance and anti-financial crime challenges?

On the first question, Blair West pointed to several pain points. The first was the need to keep up with periodic compliance reviews, particularly in terms of the volume and quality of data available and of customer service during onboarding. She also mentioned KYC outreach, managing the addition of new services and products, and the effectiveness and efficiency of transaction monitoring, particularly for correspondent banking, securities, and capital markets. Alvin Huang also highlighted the volume and quality of compliance alerts, noting the importance of leveraging technology to address false positives, reduce costs, and stay ahead of emerging threats.

Bringing a vendor’s perspective, Jeremy Annis suggested that customers’ main concern is managing the cost of compliance, and that customers often look to vendors to help them integrate compliance innovations, reduce costs, and improve efficiency. Extending that perspective, Kevin Wolf said that vendors must consider customer challenges when building compliance products, ensuring that their solutions have the breadth of coverage required as well as the capacity to deal with increased alert volumes, and that they can scale efficiently.

How are non-banks reacting to recent regulatory penalties?

In the wake of the US Department of Justice fining crypto exchange Binance a record $4.3 billion in 2023, the panel discussed how non-banks should think about their evolving compliance responsibilities. Kevin highlighted that “enforcement drives the behaviour”, raising the importance of good quality data within compliance solutions, and the need for vendors to ensure that non-banks have the resources and solutions they need to make the right compliance decisions – especially in novel industries like cryptocurrency. Blair also focused on cryptocurrency industry compliance, pointing out that crypto exchanges need to carefully consider their risk exposure, work more constructively with regulators, and leverage technology where possible to achieve those goals.

Jeremy highlighted the need for CEOs to take a more active role in the compliance process – especially since they may now be held criminally responsible for violations. Referencing the US in particular, he described an “explosion in corporates taking more of an interest in compliance” with examples of travel and accommodation firms needing to effectively screen sanctions lists and watchlists as part of their Know Your Customer (KYC) responsibilities. Jeremy noted that many non-bank entities now have quite complex compliance risks, and that penalties for failures could be “astronomical”, including fines and prison sentences.

What does the advance of AI technology mean for compliance practitioners?

With the rise of ChatGPT in 2023, Blair noted that the public and finance professionals are feeling more comfortable about AI in compliance contexts – and using it more widely. She pointed to the value of generative AI tools in performing KYC and anti-money laundering (AML) tasks, in particular data collection and summarisation, suggesting that the application of AI in these contexts will likely broaden in the future.

Alvin emphasised the need for firms to be able to trust the compliance information that AI tools generate. He suggested that as long as AI platforms assure the traceability of their results, industry confidence in them would continue to grow. Echoing that point, Kevin used the term “explainability”, referencing the need for firms to understand how and why AI tools produce their outputs – so that the information can be used properly in compliance contexts.

Despite recent dramatic advances, Jeremy pointed out that there is still a gap between what AI technology can do in compliance contexts, and what customers think it can do. While generative AI has exciting potential, Jeremy suggested that there remains “a suite of problems that need to be solved” regarding the speed, cost, and accuracy of the technology.

What are the major challenges in using AI for compliance?

Jeremy noted that some of the new generation of AI tools are still “tremendously slow” and in some circumstances not useful in providing actionable data. This may especially be the case in name matching contexts where users are searching across tens of millions of data points – such as anti-money laundering (AML) customer name searches. Given that most firms don’t have the resources or budgets to build their own generative AI models to address their specific challenges, Jeremy suggested that the way forward should be to carefully select new AI models that can be customised for a firm’s risk environment, or to work with an appropriate vendor. Firms should then apply those tailored models in ways that eliminate the tedious manual work of compliance while increasing its speed and efficiency.

Alvin agreed that generative AI models are not ideal tools for establishing customer identities. Instead, they are better applied in combination with statistical machine learning in order to analyse and summarise large amounts of unstructured data. Alvin also brought up the tendency for generative AI to generate different answers to the same prompt, or to ‘hallucinate’ answers to prompts – outputs that damage user trust in the technology, and that represent important obstacles to overcome as the technology progresses. With that in mind, Alvin stated that rather than being at the cutting edge of generative AI, it may be prudent for some firms to take a more cautious “follower” approach.

How do regulators view AI compliance technologies?

Blair suggested that, since they have been pushing innovation over the last few years, regulators seem positive about the integration of AI compliance technology “in a controlled and transparent manner”. She stressed the importance of open communication with regulatory agencies (who will also be exploring the technology) as a way to enhance the effectiveness of AI tools in compliance and to shape future guidance and legislation.

Gabriel Hopkins pointed to the example of the US Financial Crimes Enforcement Network (FinCEN), which has encouraged firms to use AI as long as they can “prove statistically” that they’re getting better results than their previous non-AI-enabled, rules-based system.
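
As a rough illustration of what “prove statistically” might look like in practice, the sketch below compares the false positive rates of a rules-based system and an AI-enabled system using a one-sided two-proportion z-test. This is only one possible framing, not a method prescribed by FinCEN or described by the panel, and the alert counts and the function name `two_proportion_z_test` are hypothetical.

```python
import math


def two_proportion_z_test(fp_old, n_old, fp_new, n_new):
    """One-sided z-test: is the new system's false positive rate lower?

    Returns the z statistic and the one-sided p-value P(Z >= z).
    """
    p_old = fp_old / n_old
    p_new = fp_new / n_new
    # Pooled rate under the null hypothesis that both systems perform equally.
    pooled = (fp_old + fp_new) / (n_old + n_new)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_old + 1 / n_new))
    z = (p_old - p_new) / std_err
    # Upper-tail probability of the standard normal distribution.
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


# Hypothetical review outcomes: alerts judged to be false positives.
rules_fp, rules_alerts = 900, 1000  # rules-based system (invented numbers)
ai_fp, ai_alerts = 700, 1000        # AI-enabled system (invented numbers)

z, p = two_proportion_z_test(rules_fp, rules_alerts, ai_fp, ai_alerts)
print(f"z = {z:.2f}, one-sided p-value = {p:.3g}")
```

A small p-value would suggest the improvement is unlikely to be due to chance. A real comparison would also need to check that the new system does not miss more genuine risk than the old one.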

How should we think about model governance in a world of generative AI?

Alvin and Kevin repeated the crucial need for explainability in the integration of AI compliance technology – a need complicated by the increasing complexity of AI models. With that in mind, Gabriel noted that as AI technology has advanced, it has become more difficult to explain to regulators – with model governance becoming a key factor.

Elaborating on that point, Jeremy suggested that effective validation of AI models comes down to some meaningful expectation about what a firm will get out of its system after feeding in certain data – rather than an in-depth understanding of the algorithmic process involved or “how it actually operates in the middle”. That being the case, Alvin stressed the need to re-validate generative AI models constantly, with a focus on the accuracy of the outputs, as a way to maintain confidence. Looking to the future, Blair suggested there might soon be scope for vendors to offer AI model validation as a third-party service.

How is AI affecting the adverse media screening landscape?

Kevin emphasised the importance of combining technology and data to derive early warning signals about risk. He pointed out that firms in Europe have had a head start in the integration of adverse media screening technology into compliance infrastructure, but that its utility and significance are also growing in the US. Alvin noted that the value of adverse media screening has expanded beyond AML risk, with many firms now using it to establish broader customer risk, including environmental, social, and governance (ESG) risk.

Jeremy talked about how AI technology has helped firms carry out adverse media screening at scale, reducing both the time the process takes and its cost. With the benefit of AI, firms are now able to screen thousands, if not millions, of customers across global adverse media sources, with a level of noise “not much worse than it would be for any other kind of screening”.

What are your predictions for the application of AI technology in 2024?

With AI technology “not going anywhere”, Kevin reiterated the need for firms to stay at the forefront of discussions about it, in order to maintain their understanding of what it can do, and ensure its explainability within their compliance solution. He pointed out that customer compliance challenges will also continue to grow, and that the development of AI technology will help firms continue on the path to compliance.

Jeremy felt that, after a lack of progress in 2023, the following 12 months will see an increase in AI innovation, with wider mainstream application of the technology. Alvin supported that notion, suggesting that generative AI will increasingly fit into customers’ overall data strategies, with compliance teams able to curate very specific parts of their data. That approach should see AI start to influence general data governance and data strategy.

Blair mentioned the specific application of generative AI to KYC, and to the suspicious activity report (SAR) filing process – with financial institutions leveraging their own internal compliance systems to train the technology. KYC is a good candidate for AI support because of the number of manual processes it involves – all of which can represent administrative pain points. She suggested that many institutions are already experimenting with the integration of generative AI in KYC and AML use cases.

Presentation: Innovations in AI for Compliance

Following the panel discussion, Ripjar CTO Joe Whitfield-Seed hosted a presentation on Ripjar’s next-generation Labyrinth Screening platform, complete with its AI Risk Profiles and recently launched AI Summaries expansion.

What’s the correct way to apply AI in compliance?

Opening the presentation, Joe looked back over the last century to chart the progression of AI models – from the primitive computers of the 1960s and the very first chatbot, to the development of neural networks in the 1990s and the deployment of the first statistical machine learning systems in the financial services industry. Only in the past few years have generative AI models accelerated, emerging as an extremely sophisticated iteration of the technology that can pass exams, create photorealistic art, and even mimic humans.

However, generative AI often introduces false information in its otherwise-impressive outputs, including factual errors in text responses, extra fingers on images of humans, or incomprehensible characters in images of text. These issues are all symptoms of highly sophisticated AI models failing to properly understand and contextualise their inputs, and so generating inaccurate or misleading responses – issues that would be problematic in compliance contexts.

Adverse media screening challenges

Adverse media screening involves an incredible amount of data collection and analysis. The process requires searches to be conducted across many years of accumulated news content, and across millions of new articles produced by various global media platforms every day. The screening process must also be able to filter out irrelevant, non-risk-related content and duplicate articles.

With that in mind, effective adverse media screening solutions must be able to discern risk information that will be key to enabling good compliance decision making. However, even with a solution capable of doing that, firms still face significant screening challenges, including being able to distinguish between search targets with the same or similar names.

Joe gave the example of how a search for a customer with the name “Elizabeth Holmes” would likely be inundated with thousands of articles about the disgraced Theranos CEO, who was convicted of fraud in 2022. Meanwhile, searches for other customers with the same name would be hampered by the sheer volume of articles about the more famous individual, and could consequently miss tangible risk information. Firms with similarly high-profile customers would find it difficult to continuously monitor their targets’ true risk level, because they would need to sort through thousands of potentially relevant articles about them on a daily basis.

Labyrinth Screening

Joe explained how Ripjar’s Labyrinth Screening platform is designed to address adverse media challenges by facilitating searches that not only account for all available information about a customer, but that are aware of identity, not just risk.

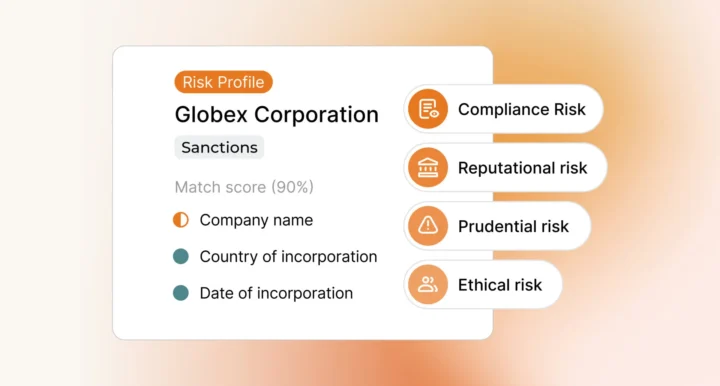

Drawing on around 6 billion news articles from premium providers, Labyrinth Screening identifies relevant news articles in seconds, with searches that take in global news articles, sanctions lists, and watchlists – in 26 languages. Labyrinth Screening is enhanced by Ripjar’s AI Risk Profiles technology which helps compliance teams extract only the most relevant risk data about their search target, and then assign it to a customer risk profile.

In a demonstration, the presentation gave the example of New York mayor Eric Adams. Powered by AI, the Labyrinth search aggregated all available information about the mayor, even from foreign language sources, as part of his risk profile. Labyrinth automatically matched the collected data against watchlist information to indicate that Eric Adams is a politically exposed person (PEP). Meanwhile, AI Risk Profiles collated profiles for other people called “Eric Adams” – reducing the potential for a false positive alert and ensuring Mayor Eric Adams’ profile was accurate and trustworthy. The AI Risk Profiles technology also allowed for navigation between Mayor Adams and his networks and relationships.

By structuring adverse media searches around identity rather than just risk data, Labyrinth Screening reduces potentially billions of input records to tens of millions – and offers compliance teams incredible efficiency savings.

AI Summaries

Joe highlighted how Labyrinth Screening’s value is enhanced further by the addition of the recently launched AI Summaries expansion. Building on the high-quality, trusted information included in a customer AI Risk Profile, AI Summaries uses generative AI to produce a clear, concise summary of the customer’s adverse media risk – with the potential to reduce assessment times by up to 90%.

The Labyrinth Screening platform demonstrates the compliance possibilities of AI-powered adverse media screening, funnelling a field of billions of documents down to a few paragraphs of meaningful, unstructured text via a high quality validation model.

Last updated: 6 January 2025