On 20 September 2023, Ripjar held an AI in Compliance webinar, welcoming financial crime compliance experts to discuss the impact of emerging trends and technologies on the industry. The exclusive, live-only event was hosted by Ripjar’s Chief Product Officer, Gabriel Hopkins, with Michael Heller, Head of Financial Crime Compliance Proposition at Dow Jones, and guest speaker Andras Cser, VP Principal Analyst at Forrester.

With awareness of artificial intelligence in financial crime applications higher than ever, firms around the world are exploring the technology’s potential and its limitations. Focusing on that dynamic, the AI in Compliance webinar involved a guest speaker presentation and a panel discussion, with opportunities for audience members to submit questions to the expert speakers.

Let’s take a closer look at the key insights and discussion points from the webinar:

Introductory Presentation

Guest speaker Andras Cser opened the panel with an introductory presentation offering an industry perspective on the current role of Artificial Intelligence (AI) in compliance.

The Role of AI

The presentation explored uses of AI in the compliance ecosystem and how the technology may help with a range of critical compliance processes – from addressing financial crimes such as money laundering and fraud, to helping enhance regulatory scrutiny, minimising customer friction, and increasing operational efficiency.

AI and machine learning (ML) tools have recently demonstrated “unprecedented improvements” in compliance contexts. These improvements include:

- More standardised, FATE-compliant vendor-developed models.

- Models with fewer re-training requirements that can learn autonomously from analyst and investigator decisions.

- More preventative models that can identify attempts to commit fraud or launder money before they take place.

- Models with better governance and explainability out of the box – simplifying audit requirements.

- More cloud/SaaS-delivered fraud management and AML models.

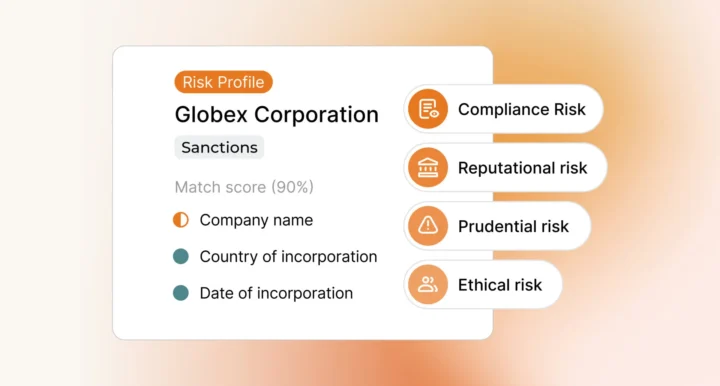

Those advancing capabilities have clear potential for AI-powered Know Your Customer (KYC) and Watchlist Management (WLM) solutions in the following areas:

- Predictive methodologies

- Adverse media and politically exposed person (PEP) screening

- Natural language processing (NLP) and link analysis between entities

- Information processing, discarding irrelevant data points in large quantities of data

- Shortening investigation times

- Filtering and managing watchlists

Supported by AI, these applications promise a wider range of compliance benefits, such as fewer false positive alerts, enhanced contextual analysis and risk scoring, and less manual review work for employees.

AI Best Practices

In order to capitalise on the promise of AI, it’s important that firms understand the best practices for its integration:

- AI model governance needs to be able to prove that challenger models perform better than current models.

- Firms need to use statistical measurements and high-quality software development life cycle (SDLC) processes for building AI models. This may be easier with supervised AI models, which are trained and continuously tested against a set of truth data, as opposed to unsupervised models, where deductions are made without that reference point.

- Firms must work with regulators, such as FinCEN and FINRA, in the development and application of AI models.

- AI models should be designed for explainability in investigative contexts.

- Firms should plan for partial retraining in order to reduce the need to retrain models from scratch.
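
To make the champion/challenger point concrete, here is a minimal Python sketch of how a challenger model might be compared against a current model using analyst-confirmed truth data. It is an illustration only: the scores, labels, alert threshold, and the promotion rule in `challenger_wins` are all hypothetical assumptions, not anything presented in the webinar.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    precision: float
    recall: float
    false_positives: int


def evaluate(scores, labels, threshold):
    """Score a model's alerts against analyst-confirmed truth labels."""
    tp = fp = fn = 0
    for score, is_risk in zip(scores, labels):
        alerted = score >= threshold
        if alerted and is_risk:
            tp += 1
        elif alerted and not is_risk:
            fp += 1
        elif not alerted and is_risk:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return Metrics(precision, recall, fp)


def challenger_wins(champion, challenger):
    """Hypothetical promotion rule: recall must not drop, precision must improve."""
    return (challenger.recall >= champion.recall
            and challenger.precision > champion.precision)


# Hypothetical truth data: True means an analyst confirmed genuine risk.
labels = [True, False, False, True, False, True, False, False]
champion_scores = [0.9, 0.7, 0.6, 0.8, 0.2, 0.4, 0.55, 0.1]
challenger_scores = [0.95, 0.3, 0.6, 0.85, 0.1, 0.7, 0.2, 0.05]

champion = evaluate(champion_scores, labels, threshold=0.5)
challenger = evaluate(challenger_scores, labels, threshold=0.5)

print("champion:", champion)
print("challenger:", challenger)
print("promote challenger:", challenger_wins(champion, challenger))
```

In practice a firm would likely use a much larger labelled set and agree the promotion criteria with its model governance function; the value of the sketch is that the comparison is recorded as measurable evidence rather than asserted.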

The Future of AI

Casting an eye to the horizon, firms may expect the following AI compliance developments:

- Increased adoption of cloud-based analytics and cloud-based delivery methods for watchlist management, and adoption of new transaction monitoring tools for addressing money laundering.

- Increased use of AI in addressing risks in peer-to-peer payments and in cryptocurrency payments.

- Increasing use of NLP to analyse the textual content surrounding transactions and in adverse media stories.

- Advanced link analysis to determine connections between transactions and other data points, such as telephone numbers or addresses.

- Predictive investigation of potential financial activity that exhibits criminal ‘red flags’.
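
As a rough illustration of the link analysis idea, the Python sketch below groups transactions that share a telephone number or address, including indirect links through a third transaction. The transaction records, field names, and the `linked_groups` helper are hypothetical; a production system would typically also need fuzzy matching and entity resolution, which are not shown here.

```python
from collections import defaultdict


def linked_groups(transactions):
    """Group transaction IDs connected by any shared attribute value."""
    # Index transactions by each (field, value) pair they carry.
    by_attribute = defaultdict(list)
    for tx_id, attributes in transactions.items():
        for key, value in attributes.items():
            by_attribute[(key, value)].append(tx_id)

    # Two transactions are neighbours if they share an attribute value.
    neighbours = defaultdict(set)
    for tx_ids in by_attribute.values():
        for tx_id in tx_ids:
            neighbours[tx_id].update(tx_ids)

    # Depth-first traversal collects each connected group once.
    seen, groups = set(), []
    for start in transactions:
        if start in seen:
            continue
        stack, group = [start], set()
        while stack:
            current = stack.pop()
            if current in group:
                continue
            group.add(current)
            stack.extend(neighbours[current] - group)
        seen |= group
        groups.append(sorted(group))
    return groups


# Hypothetical records: tx1 and tx2 share a phone, tx2 and tx3 share an address.
transactions = {
    "tx1": {"phone": "555-0101", "address": "1 High St"},
    "tx2": {"phone": "555-0101", "address": "9 Low Rd"},
    "tx3": {"phone": "555-0199", "address": "9 Low Rd"},
    "tx4": {"phone": "555-0142", "address": "3 Mill Ln"},
}

print(linked_groups(transactions))
```

Here tx1 and tx3 have no attribute in common, yet they land in the same group because both connect to tx2, which is the kind of indirect relationship an investigator could miss when reviewing alerts one at a time.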

Panel Discussion

Following the presentation, Gabriel Hopkins framed the panel discussion by referencing the renewed “wave of excitement” about AI and machine learning tools in compliance and screening contexts. The discussion went on to cover the recent rise in popularity of generative AI and large language models, and the challenges that firms should expect as they seek to integrate the new technology.

Where do you think we are in the cycle of industry attitudes to AI?

Mike Heller suggested that AI was at a ‘midpoint’ – in the sense that, while there is excitement about its advancing capabilities, there is also concern about its risks, and a push from governments to regulate, as the technology is integrated into business functions. In the financial industry, that trend has given rise to a focus on ‘compliance-ready’ AI – meaning the introduction of tools that are explainable, auditable, and can be tested against the relevant metrics. Compliance-ready AI obviously requires a “significant amount of technical expertise” which will, in turn, require coordination with the regulatory community.

Andras Cser raised the issue of data protection and privacy, and the need for developers to be very careful about how AI tools handle personal data. Generative AI may pose unique new compliance challenges: Cser pointed to the EU’s recent regulatory focus on the unacceptable risks of generative AI, including its potential to manipulate certain groups of people with deep fakes and voice synthesis. Essentially, Cser explained, with the benefit of generative AI, criminals may be able to automate their deception of customers, significantly increasing the scope and effectiveness of their illegal activities.

Where do you see AI having the greatest impact in compliance?

Andras Cser pointed out that AI has been used for a long time in compliance (for example, in risk scoring models), and described its impact as “evolutionary” rather than revolutionary in these contexts. However, he suggested that generative AI might have the greatest impact on the investigative side of compliance. While investigators currently need to have an extensive understanding of the details of a particular case, generative AI has the potential to reduce that administrative burden and to provide guidance, and even assistance, to compliance teams. This trend might include AI systems answering questions or providing resources to help employees work with data and effectively remediate alerts.

The predictive potential of AI will also be important for investigations. AI-enabled systems could be used to automatically identify patterns of behaviour indicative of money laundering or fraud – and alert investigators before the crime takes place.

How will AI continue to improve established compliance processes?

Mike Heller highlighted the strength of AI tools in facilitating compliance screening at scale, including processing information, and analysing data from structured and unstructured sources. Heller re-emphasised the value of compliance-ready AI solutions, referencing Dow Jones’ partnership with Ripjar as a way to harness best-in-class technology for the purpose of screening vast amounts of customer risk data. He also mentioned feature engineering for existing models, with AI enhancing the explainability of certain processes in order to make them more accessible for auditors, regulators, and customers.

How should organisations select their AI vendors and technology?

Andras Cser stressed the need for organisations to view AI models as “starting points”, seeking those with a combination of rules-based decision-making and machine learning features. He also suggested that firms should seek vendors that can provide a comparison of model efficiencies (between current champion and challenger models) in order to understand how a product will ultimately integrate within existing compliance infrastructure.

Are there any AI tools that are white-listed by regulators?

Mike Heller pointed out that while there isn’t a current white-list of AI tools, organisations should survey their surroundings to find out which tools competitors use, how those tools have been tested, and how the systems have fared under review. Gabriel Hopkins noted that regulators have also been pushing organisations towards the integration of AI and machine learning tools as a way to enhance their screening processes. He added that while regulators “don’t want to give people carte blanche” they are nonetheless opening up to the wider use of these solutions.

Andras Cser pointed to the limitations of entirely heuristic compliance, which is particularly vulnerable to criminals who know how to exploit the rules. He characterised AI as the only known long-term strategy for dealing with evolving criminal methodologies, suggesting that regulators and institutions would need to work through initial challenges in order to optimise its use.

How is guidance from organisations like the Wolfsberg Group helpful?

Following advances in AI and machine learning, Mike Heller noted that the Wolfsberg Group’s 2022 Negative News FAQs advised the use of technology as a means to screen against unstructured adverse media data. The move reflects a shift in the expectations of financial regulators towards firms integrating tools capable of matching the increasing sophistication of criminal methodologies – and effectively implies the use of machine learning-enabled technology.

How should institutions approach the issue of explainability in AI models?

Mike Heller stressed the importance of AI providers being able to present documentation on how their model surfaces risk-relevant material. Organisations should then take that documentation through an internal review process with their compliance and legal departments to ensure alignment with their policies and risk appetite. Andras Cser added the notion of feature extraction to that process – essentially as a means to ensure that the vendor is able to deliver an explanation for decisions taken in the AI risk scoring process. Cser went on to mention the benefit of having the vendor help with the operation of the AI model, splitting responsibility for its management.

Referencing the regulatory strictness of compliance and transaction monitoring requirements, Cser also suggested that AI offers a way to improve on existing models, including developing tools that boost the accuracy and efficiency of risk scoring.

How would you recommend firms prepare for the ‘new wave’ of AI?

Looking back on years of industry experience, Mike Heller noted a shift in the type of skills needed to manage compliance. Where once legal and regulatory expertise was required, today teams require individuals with project management, engineering, and technical backgrounds who can also be involved in the operational design and development of the tools. The next step may be to hire internal data scientists and AI experts to help bridge the gap between the regulatory and technical functions.

Andras Cser emphasised the need for firms to move slowly in the implementation of new tools, and in understanding what exactly is being integrated. He highlighted the value of data scientists in the new AI landscape, who can assess the compatibility of vendors’ models, and provide a way of governing the development of the technology to ensure challenger models are better than champions. Ultimately, firms should seek to ensure that the basics of their models function as intended, and that their integration aligns with an organisation’s risk appetite.

How are firms handling AI governance?

From a vendor’s perspective, Gabriel Hopkins noted the introduction of centralised model management committees, especially in global banks, as a way for firms to keep boards updated on the implementation of AI models. Expanding on that point, Mike Heller suggested that boards have been scrambling to understand how generative AI tools, such as ChatGPT, will impact their business operations, particularly in terms of compliance. He suggested that institutions that appoint compliance experts at the highest level will be better placed to handle AI integration and to address the challenges that may emerge in the future.

Do you think regulators will expect a level of human input in the regulation of AI?

Taking a vendor’s perspective once again, Gabriel Hopkins suggested that regulators would “absolutely” expect human involvement in the implementation and execution of AI technology, and recommended that firms frame the integration of new AI tools as supporting the efforts of analysts in making compliance decisions.

Echoing that point, Mike Heller remarked that the integration of AI technology will not make compliance easier, but rather make the process faster. The most critical decisions will continue to be made by analysts, who will, with the benefit of AI, be empowered to keep pace with criminal methodologies. Heller compared the evolution of AI tools with the history of sanctions compliance, where new technologies previously allowed financial institutions to scale up their compliance response significantly.

What’s the ‘number one’ takeaway that you’d share about the use of AI in compliance?

Stressing the importance of careful adoption, Mike Heller emphasised the need to keep pace with competitors while bridging the gap between technical and data expertise, and regulatory expectation. Andras Cser returned to the question of explainability, stressing that “explainable AI is always better than inexplicable AI”.

To learn more about Ripjar’s AI compliance and screening technology, get in touch today.

Last updated: 6 January 2025